HRL-PPO-USV Model

Model Description

This is a Hierarchical Reinforcement Learning (HRL) model trained with Proximal Policy Optimization (PPO) for Unmanned Surface Vehicle (USV) control tasks.

Repository Structure

├── model/ # Trained model files

│ ├── hrl-v1-policy-weight.zip # model weights and configuration

│ └── hrl-v1-vecnormalize.pkl # VecNormalize wrapper for observation normalization

└── README.md # This README file

Model Performance

The figures below compare the performance of the HRL model with that of the end-to-end DRL model acme-d4pg-usv.

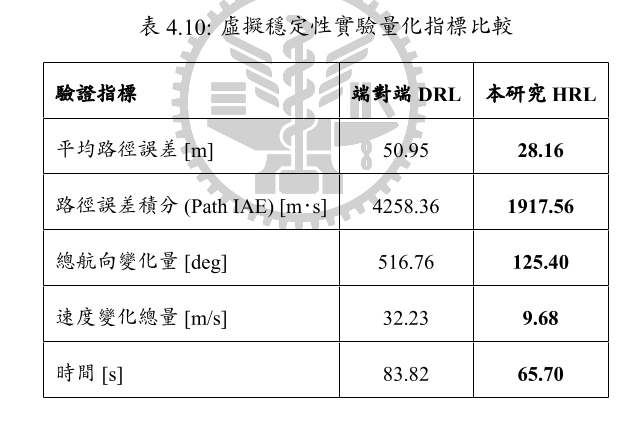

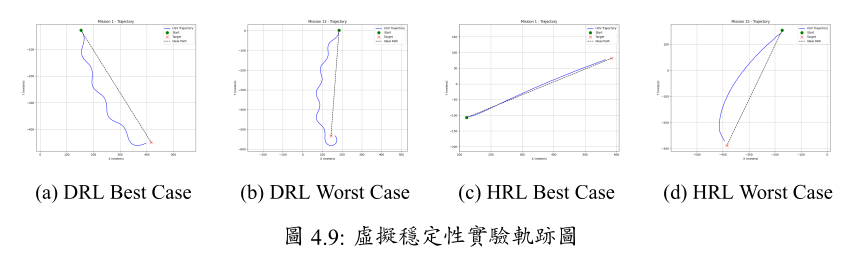

Simulation Results

- Stability test: Straight-line navigation

HRL outperforms the end-to-end DRL baseline in point-to-point straight-line navigation in terms of path-following accuracy, control smoothness, and overall efficiency.

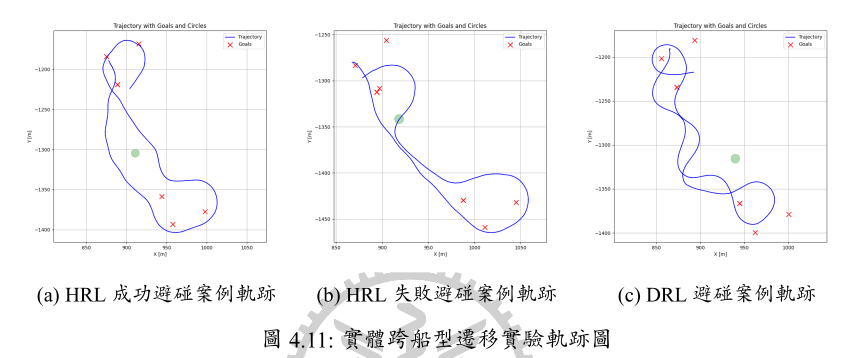

- Obstacle avoidance test

HRL demonstrates safer, more efficient, and more reliable decision-making when encountering obstacles.

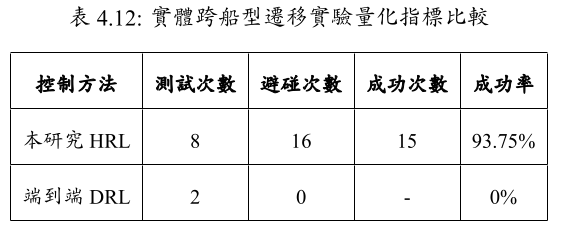

Real-World Deployment

Zero-shot deployment

The policy trained in the Jong-shyn No.5 simulator was deployed zero-shot to goint-js, a vessel on which it had not previously been tested. The experiment uses the LegRun behavior defined in the MOOS-IvP framework, with a 150 m leg distance and a 25 m waypoint turn radius.

Inference

Refer to HRL Inference
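As a rough illustration of how the two files under `model/` might be loaded together, here is a minimal sketch. It assumes the `.zip` file was saved with Stable Baselines3's `PPO.save` and the `.pkl` file with `VecNormalize.save`; this README does not confirm either. The helper names `artifact_paths` and `load_policy`, and the `make_env` factory that builds the USV environment, are hypothetical. The linked HRL Inference instructions remain the authoritative procedure.

```python
from pathlib import Path


def artifact_paths(model_dir="model"):
    """Return (policy_zip, vecnormalize_pkl) paths inside the model/ folder."""
    root = Path(model_dir)
    return root / "hrl-v1-policy-weight.zip", root / "hrl-v1-vecnormalize.pkl"


def load_policy(make_env, model_dir="model"):
    """Load the PPO policy and its observation-normalization statistics.

    make_env is a hypothetical zero-argument callable returning a Gymnasium
    environment with the same observation and action spaces used in training.
    """
    # Imported lazily so the path helper works without Stable Baselines3.
    from stable_baselines3 import PPO
    from stable_baselines3.common.vec_env import DummyVecEnv, VecNormalize

    weights, stats = artifact_paths(model_dir)

    # Wrap the environment and restore the saved running mean and variance.
    venv = DummyVecEnv([make_env])
    venv = VecNormalize.load(str(stats), venv)

    # Freeze the statistics and leave rewards unnormalized at inference time.
    venv.training = False
    venv.norm_reward = False

    model = PPO.load(str(weights), env=venv)
    return model, venv
```

With an environment factory in place, a rollout would follow the usual Stable Baselines3 pattern: `obs = venv.reset()`, then repeatedly `action, _ = model.predict(obs, deterministic=True)` and `obs, reward, done, info = venv.step(action)`. Skipping the `VecNormalize` statistics would feed the policy raw observations on a different scale from the one it was trained on, so the two files should be loaded as a pair.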

Training

Refer to HRL Training

- Algorithm: PPO

- Framework: Stable Baselines3, MOOS-IvP, ROS

- Task: USV Collision Avoidance
