PIE Dataset Card for "argmicro"

This is a PyTorch-IE wrapper for the ArgMicro Hugging Face dataset loading script.

Usage

```python
from pie_datasets import load_dataset
from pie_documents.documents import TextDocumentWithLabeledSpansAndBinaryRelations

# load English variant
dataset = load_dataset("pie/argmicro", name="en")

# if required, normalize the document type (see section Document Converters below)
dataset_converted = dataset.to_document_type(TextDocumentWithLabeledSpansAndBinaryRelations)
assert isinstance(dataset_converted["train"][0], TextDocumentWithLabeledSpansAndBinaryRelations)

# get first relation in the first document
doc = dataset_converted["train"][0]
print(doc.binary_relations[0])
# BinaryRelation(head=LabeledSpan(start=0, end=81, label='opp', score=1.0), tail=LabeledSpan(start=326, end=402, label='pro', score=1.0), label='reb', score=1.0)
print(doc.binary_relations[0].resolve())
# ('reb', (('opp', "Yes, it's annoying and cumbersome to separate your rubbish properly all the time."), ('pro', 'We Berliners should take the chance and become pioneers in waste separation!')))
```

Dataset Variants

The dataset contains two BuilderConfigs:

- de: with the original text collection in German
- en: with the English-translated texts

Data Schema

The document type for this dataset is ArgMicroDocument which defines the following data fields:

- text (str)
- id (str, optional)
- topic_id (str, optional)
- metadata (dictionary, optional)

and the following annotation layers:

- stance (annotation type: Label)
  - description: A document may contain one of these stance labels: pro, con, unclear, or no label when it is undefined (see here for reference).
- edus (annotation type: Span, target: text)
- adus (annotation type: LabeledAnnotationCollection, target: edus)
  - description: each element of adus may consist of several entries from edus, so we require LabeledAnnotationCollection as annotation type. This is originally indicated by seg edges in the data.
  - LabeledAnnotationCollection has the following fields:
    - annotations (annotation type: Span, target: text)
    - label (str, optional), values: opp, pro (see here)
- relations (annotation type: MultiRelation, target: adus)
  - description: Undercut (und) relations originally target other relations (i.e. edges), but we let them target the head of the targeted relation instead. The original state can be deterministically reconstructed by taking the label into account. Furthermore, the heads of additional source (add) relations are integrated into the head of the target relation (note that this propagates along und relations). We model this with MultiRelations whose head and tail are of type LabeledAnnotationCollection.
  - MultiRelation has the following fields:
    - head (tuple, annotation type: LabeledAnnotationCollection, target: adus)
    - tail (tuple, annotation type: LabeledAnnotationCollection, target: adus)
    - label (str, optional), values: sup, exa, reb, und (see here for reference, but note that helper relations seg and add are not there anymore, see above).

See here for the annotation type definitions.
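The retargeting of und and add edges described above can be illustrated with a small sketch. It is not the wrapper's actual code: the edge record format (source id, target id, type), the ids, and the function name `resolve_edges` are hypothetical, and it assumes that the head of a relation corresponds to the source of the original edge and that seg edges have already been folded into the ADUs.

```python
from typing import Dict, List, Tuple

# Hypothetical edge record: (source id, target id, edge type).
Edge = Tuple[str, str, str]
Relation = Tuple[Tuple[str, ...], Tuple[str, ...], str]


def resolve_edges(edges: Dict[str, Edge]) -> List[Relation]:
    """Turn raw edges into (head, tail, label) triples without helper edges."""
    # Every non-helper edge starts with its own source as the only head entry.
    heads = {eid: [src] for eid, (src, _, typ) in edges.items() if typ != "add"}
    # An "add" edge contributes its source to the head of the edge it targets.
    for src, trg, typ in edges.values():
        if typ == "add":
            heads[trg].append(src)
    relations = []
    for eid, (_, trg, typ) in edges.items():
        if typ == "add":
            continue  # helper edges do not survive as relations
        # An edge that targets another edge (und) is pointed at that edge's head.
        tail = tuple(heads[trg]) if trg in edges else (trg,)
        relations.append((tuple(heads[eid]), tail, typ))
    return relations


example = {
    "c1": ("a2", "a1", "sup"),  # a2 supports a1
    "c2": ("a3", "c1", "und"),  # a3 undercuts the support edge c1
    "c3": ("a4", "c1", "add"),  # a4 is an additional source of c1
}
relations = resolve_edges(example)
```

In this toy input the und relation ends up targeting both a2 and a4, which is the propagation along und relations mentioned in the description.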

Document Converters

The dataset provides document converters for the following target document types:

pie_documents.documents.TextDocumentWithLabeledSpansAndBinaryRelations

- LabeledSpans, converted from ArgMicroDocument's adus
  - labels: opp, pro
  - if an ADU contains multiple spans (i.e. EDUs), we take the start of the first EDU and the end of the last EDU as the boundaries of the new LabeledSpan. We also raise exceptions if any newly created LabeledSpans overlap.
- BinaryRelations, converted from ArgMicroDocument's relations
  - labels: sup, reb, und, joint, exa
  - if the head or tail consists of multiple adus, then we build BinaryRelations with all head-tail combinations and take the label from the original relation. Then, we build BinaryRelations with label joint between each component that previously belonged to the same head or tail, respectively.
- metadata, we keep the ArgMicroDocument's metadata, but stance and topic_id.
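As a minimal sketch of the ADU-to-LabeledSpan step described above: spans are modelled here as plain (start, end) character offsets with an exclusive end, and the helper names `merge_edus` and `check_no_overlap` are hypothetical, not part of the converter.

```python
from typing import List, Tuple

Span = Tuple[int, int]  # (start, end) character offsets, end exclusive


def merge_edus(edus: List[Span]) -> Span:
    """Use the start of the first EDU and the end of the last EDU as boundaries."""
    ordered = sorted(edus)
    return ordered[0][0], ordered[-1][1]


def check_no_overlap(spans: List[Span]) -> None:
    """Raise if any two of the newly created spans overlap."""
    ordered = sorted(spans)
    for (s1, e1), (s2, e2) in zip(ordered, ordered[1:]):
        if s2 < e1:
            raise ValueError(f"overlapping spans: {(s1, e1)} and {(s2, e2)}")


# An ADU made of two EDUs becomes one span that covers both (and the gap between them).
adu_span = merge_edus([(40, 60), (0, 25)])
check_no_overlap([adu_span, (61, 90)])
```

Because the merged span also covers any text between its EDUs, two ADUs whose EDUs interleave would produce overlapping spans, which is the case the exception guards against.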

See here for the document type definitions.
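The expansion of a MultiRelation into BinaryRelations, as described in the converter notes above, might look roughly like the following. This is a sketch under assumptions: ADUs are represented by plain ids, `expand_relation` is a hypothetical name, and emitting one joint relation per unordered pair (rather than one per direction) is a guess, since the card does not say.

```python
from itertools import combinations, product
from typing import List, Sequence, Tuple

Triple = Tuple[str, str, str]  # (head id, tail id, label)


def expand_relation(head: Sequence[str], tail: Sequence[str], label: str) -> List[Triple]:
    """Expand a multi-argument relation into pairwise relations."""
    # All head-tail combinations inherit the original label.
    relations = [(h, t, label) for h, t in product(head, tail)]
    # Components that shared a head (or a tail) are linked with "joint".
    for group in (head, tail):
        relations += [(a, b, "joint") for a, b in combinations(group, 2)]
    return relations


triples = expand_relation(("a2", "a4"), ("a1",), "sup")
```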

Collected Statistics after Document Conversion

We use the script evaluate_documents.py from PyTorch-IE-Hydra-Template to generate these statistics.

After checking out that code, the statistics and plots can be generated by the command:

```bash
python src/evaluate_documents.py dataset=argmicro_base metric=METRIC
```

where METRIC is the name of one of the available metric configs in config/metric/METRIC (see metrics).

This also requires the following dataset config in configs/dataset/argmicro_base.yaml within the repo directory:

```yaml
_target_: src.utils.execute_pipeline
input:
  _target_: pie_datasets.DatasetDict.load_dataset
  path: pie/argmicro
  revision: 28ef031d2a2c97be7e9ed360e1a5b20bd55b57b2
  name: en
```

For token-based metrics, this uses bert-base-uncased from transformers.AutoTokenizer (see AutoTokenizer and bert-base-uncased) to tokenize text in TextDocumentWithLabeledSpansAndBinaryRelations (see document type).

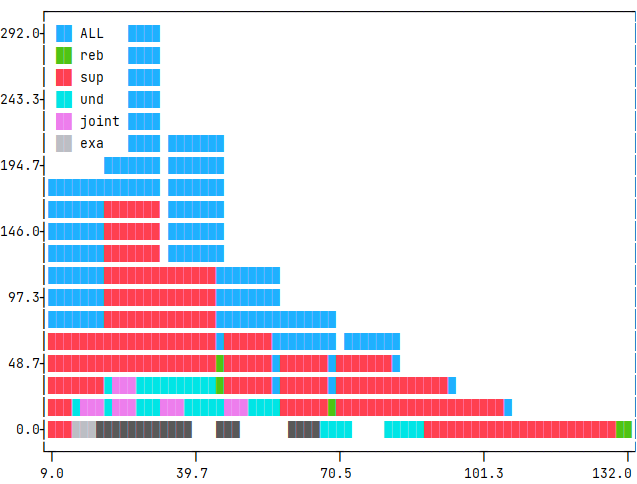

Relation argument (outer) token distance per label

The distance is measured from the first token of the first argumentative unit to the last token of the last unit, a.k.a. outer distance.

We collect the following statistics: number of documents in the split (no. doc), number of relations (len), mean token distance (mean), standard deviation of the distance (std), minimum outer distance (min), and maximum outer distance (max). We also present histograms in the collapsible section, showing the distribution of these relation distances (x-axis: distance; y-axis: unit count).
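To make the outer distance concrete, here is a minimal sketch. It assumes each argument is given as a (start, end) token span with an exclusive end; the function name `outer_token_distance` is hypothetical and the metric's actual implementation may count boundaries differently.

```python
from typing import Tuple

TokenSpan = Tuple[int, int]  # (start, end) token indices, end exclusive


def outer_token_distance(head: TokenSpan, tail: TokenSpan) -> int:
    """Tokens from the first token of the earlier unit to the last token of the later one."""
    return max(head[1], tail[1]) - min(head[0], tail[0])


# The order of the arguments does not matter for the outer distance.
d1 = outer_token_distance((0, 5), (12, 20))
d2 = outer_token_distance((12, 20), (0, 5))
```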

Command

```bash
python src/evaluate_documents.py dataset=argmicro_base metric=relation_argument_token_distances
```

| | len | max | mean | min | std |
|---|---|---|---|---|---|
| ALL | 1018 | 127 | 44.434 | 14 | 21.501 |
| exa | 18 | 63 | 33.556 | 16 | 13.056 |
| joint | 88 | 48 | 30.091 | 17 | 9.075 |
| reb | 220 | 127 | 49.327 | 16 | 24.653 |
| sup | 562 | 124 | 46.534 | 14 | 22.079 |
| und | 130 | 84 | 38.292 | 17 | 12.321 |

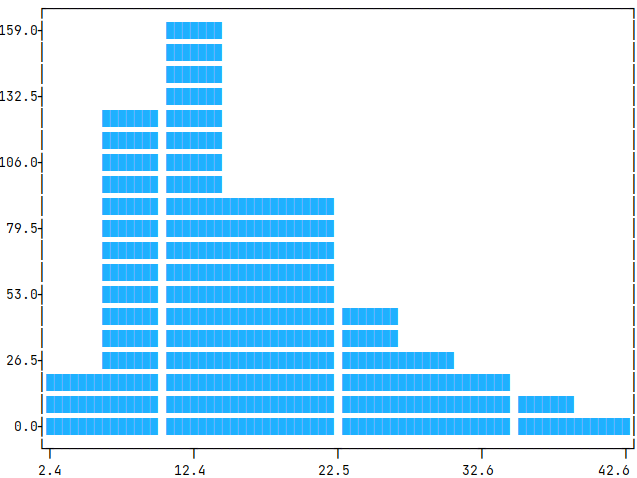

Span lengths (tokens)

The span length is measured from the first token of the first argumentative unit to the last token of the particular unit.

We collect the following statistics: number of documents in the split (no. doc), number of spans (len), mean number of tokens in a span (mean), standard deviation of the number of tokens (std), minimum tokens in a span (min), and maximum tokens in a span (max). We also present histograms in the collapsible section, showing the distribution of these token counts (x-axis: tokens per span; y-axis: unit count).
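The idea of counting the tokens covered by a character-level span can be sketched as follows. Note the loud assumption: a whitespace tokenizer stands in for bert-base-uncased here so that the example needs no downloads, which means the counts will differ from the reported statistics. The helper names and the sample text are hypothetical.

```python
import re
from typing import List, Tuple

Span = Tuple[int, int]  # (start, end) character offsets, end exclusive


def whitespace_tokens(text: str) -> List[Span]:
    """Character offsets of whitespace-separated tokens (stand-in tokenizer)."""
    return [(m.start(), m.end()) for m in re.finditer(r"\S+", text)]


def span_length_in_tokens(text: str, span: Span) -> int:
    """Number of tokens that lie completely inside the character span."""
    start, end = span
    return sum(1 for s, e in whitespace_tokens(text) if s >= start and e <= end)


text = "We should separate waste. It helps the city."
first = span_length_in_tokens(text, (0, 25))    # "We should separate waste."
second = span_length_in_tokens(text, (26, 38))  # "It helps the"
```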

Command

```bash
python src/evaluate_documents.py dataset=argmicro_base metric=span_lengths_tokens
```

| statistics | train |
|---|---|
| no. doc | 112 |
| len | 576 |
| mean | 16.365 |
| std | 6.545 |
| min | 4 |
| max | 41 |

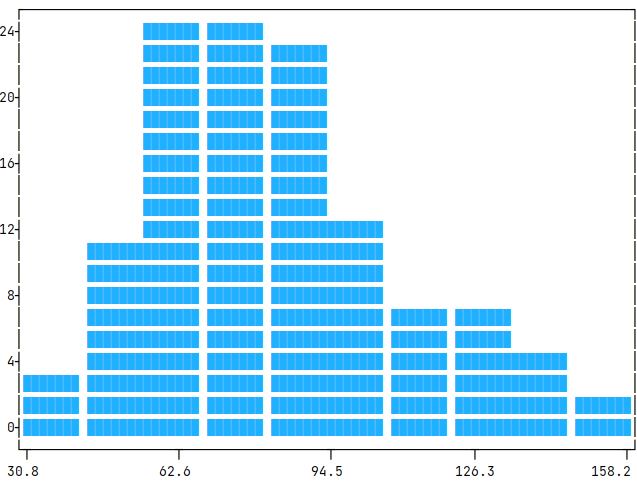

Token length (tokens)

The token length is measured from the first token of the document to the last one.

We collect the following statistics: number of documents in the split (no. doc), mean document length in tokens (mean), standard deviation of the length (std), minimum number of tokens in a document (min), and maximum number of tokens in a document (max). We also present histograms in the collapsible section, showing the distribution of these token lengths (x-axis: tokens per document; y-axis: unit count).
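The summary statistics listed above can be computed from a list of per-document token counts along these lines. This is only an illustration: the function name `summarize` and the toy counts are made up, and using the population standard deviation is an assumption, since the card does not state which variant the metric uses.

```python
import statistics
from typing import Dict, List, Union


def summarize(lengths: List[int]) -> Dict[str, Union[int, float]]:
    """Summary statistics over per-document token counts."""
    return {
        "no. doc": len(lengths),
        "mean": statistics.fmean(lengths),
        # Assumption: population standard deviation (pstdev), not sample (stdev).
        "std": statistics.pstdev(lengths),
        "min": min(lengths),
        "max": max(lengths),
    }


stats = summarize([2, 4, 4, 4, 5, 5, 7, 9])  # toy counts, not the real data
```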

Command

```bash
python src/evaluate_documents.py dataset=argmicro_base metric=count_text_tokens
```

| statistics | train |
|---|---|
| no. doc | 112 |
| mean | 84.161 |
| std | 22.596 |
| min | 36 |
| max | 153 |
