Context Cascade Compression: Exploring the Upper Limits of Text Compression

Usage

from transformers import AutoModel, AutoTokenizer

model_name = 'liufanfanlff/C3-Context-Cascade-Compression'

tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

model = AutoModel.from_pretrained(model_name, trust_remote_code=True, low_cpu_mem_usage=True, device_map='cuda', use_safetensors=True, pad_token_id=tokenizer.eos_token_id)

# device_map='cuda' already places the weights on the GPU, so only eval mode is set here
model = model.eval()

prompt = 'Repeat the text: '

context = "帝高阳之苗裔兮,朕皇考曰伯庸。摄提贞于孟陬兮,"

# Alternative input to try:
# context = "lfflfflfflfflfflfflfflfflff"

outputs = model.chat(tokenizer, context, prompt)

print("Repeat the text:", outputs)
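Because the prompt asks the model to repeat the compressed context, one natural follow-up is to check how closely the output matches the input. The snippet below is a minimal sketch of such a check, not part of the C3 codebase: `reconstruction_ratio` is a hypothetical helper built on Python's standard `difflib`, and it assumes `model.chat` returns a plain string. The `outputs` value here is a stand-in so the snippet runs without a GPU.

```python
from difflib import SequenceMatcher


def reconstruction_ratio(original: str, reconstructed: str) -> float:
    """Return a similarity score in [0, 1]; 1.0 means the strings are identical."""
    # autojunk=False disables difflib's heuristic that ignores very frequent
    # characters in long sequences, so every character is compared.
    matcher = SequenceMatcher(None, original, reconstructed, autojunk=False)
    return matcher.ratio()


context = "帝高阳之苗裔兮,朕皇考曰伯庸。摄提贞于孟陬兮,"

# Stand-in for the string returned by model.chat(tokenizer, context, prompt)
# (assumption: the real call returns a str).
outputs = context

print(f"reconstruction ratio: {reconstruction_ratio(context, outputs):.3f}")
```

In real use, replace the stand-in with the actual `outputs` from the usage code above. A ratio below 1.0 indicates that the decoded text differs from the original context.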

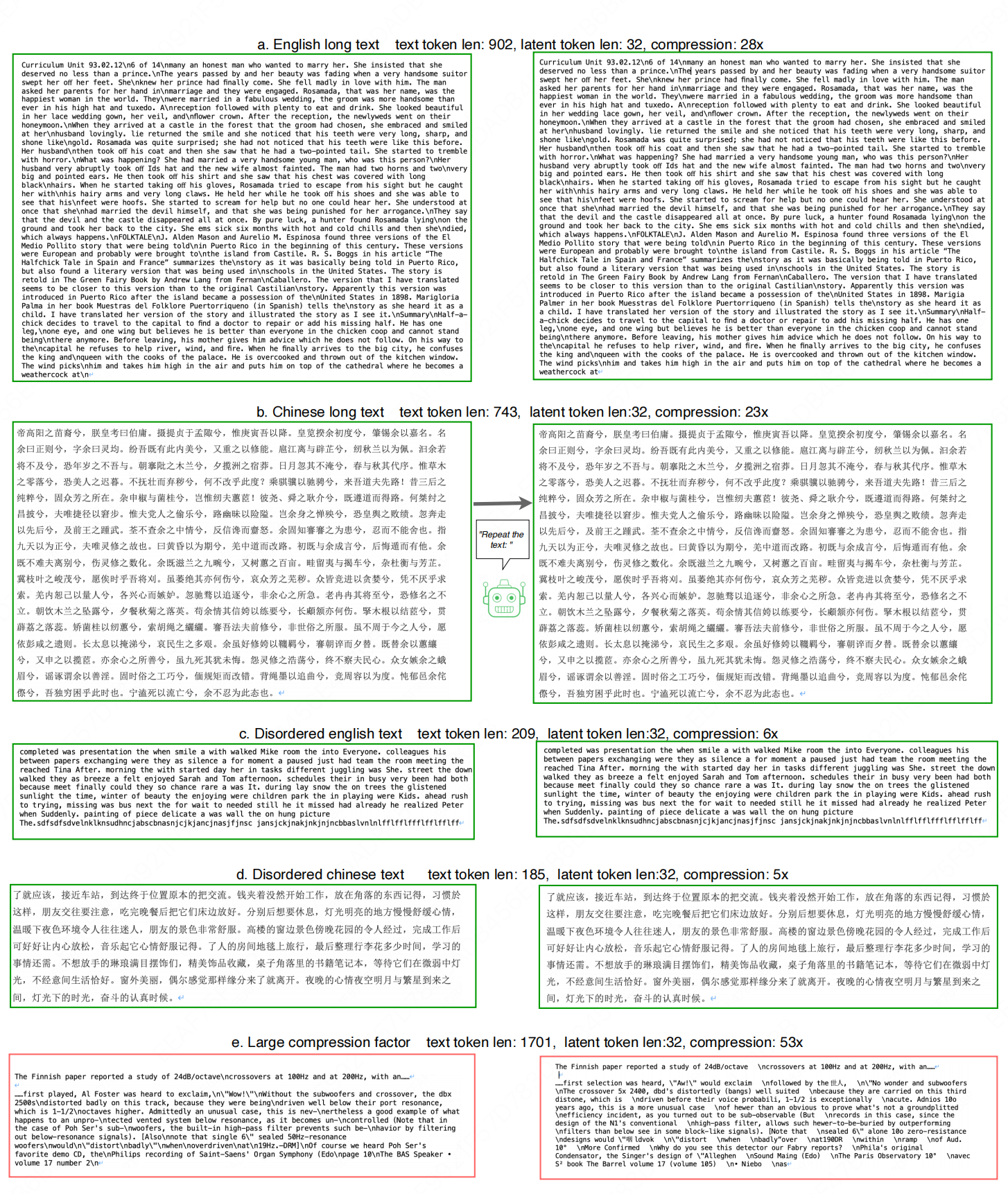

viz

Contact

If you have any questions, feel free to contact me by email at [email protected].

Acknowledgement

- DeepSeek-OCR: the idea for C3 grew out of rethinking this work.

- GOT-OCR2.0: the code was adapted from GOT-OCR2.0.

- Qwen: the LLM base model of C3.

Citation

@article{liu2025context,
  title={Context Cascade Compression: Exploring the Upper Limits of Text Compression},
  author={Liu, Fanfan and Qiu, Haibo},
  journal={arXiv preprint arXiv:2511.15244},
  year={2025}
}
